Overview of Data Platforms

A data platform is an integrated set of technologies that collectively enable the collection, storage, processing, management, and delivery of data throughout an organization. It serves as the foundational infrastructure that supports data engineering, data analytics, machine learning, and data-driven decision-making processes.

Why Data Platforms are Essential

In today's data-driven world, organizations generate and consume vast amounts of data from various sources. A data platform provides a unified and scalable environment to handle this data efficiently, enabling organizations to:

- Make Informed Decisions: By providing timely and accurate data, organizations can make evidence-based decisions.

- Enhance Operational Efficiency: Automate data workflows to reduce manual effort and errors.

- Foster Innovation: Enable data scientists and analysts to explore data and develop new insights or products.

- Maintain Data Governance and Compliance: Ensure data security, privacy, and compliance with regulations.

Core Components of a Data Platform

A robust data platform typically consists of several key components, each serving a specific purpose in the data lifecycle:

1. Data Ingestion

This layer is responsible for collecting data from various sources, which can include databases, APIs, files, and streaming data.

- Batch Ingestion: Periodically transferring large volumes of data.

- Real-time Ingestion: Capturing data continuously as it is generated.

2. Storage

Stores the ingested data in a reliable and scalable manner.

- Data Lakes: Store raw, unprocessed data in its native format.

- Data Warehouses: Store processed, structured data optimized for querying and analysis.

- Databases: Traditional relational or NoSQL databases for transactional data.

3. Processing and Transformation

Transforms raw data into a usable format through cleaning, enrichment, aggregation, and formatting.

- ETL/ELT Processes: Extract, Transform, Load or Extract, Load, Transform workflows.

- Stream Processing: Real-time data transformations.

4. Orchestration

Manages the scheduling, execution, and monitoring of data workflows.

- Workflow Management: Define and manage complex data pipelines.

- Dependency Handling: Ensure tasks execute in the correct order.

- Error Handling and Recovery: Robust mechanisms to handle failures.

5. Access Layer

Provides mechanisms for data consumption by end-users or applications.

- APIs and Services: Enable programmatic access to data.

- Business Intelligence Tools: Support data visualization and reporting.

- Data Catalogs: Facilitate data discovery and understanding.

6. Data Governance and Security Layer

Ensures data is secure, compliant, and of high quality.

- Access Control: Manage who can access what data.

- Data Quality Management: Validate and monitor data integrity.

- Compliance and Auditing: Ensure adherence to regulations like GDPR or HIPAA.

7. Analytics and Machine Learning Layer

Enables advanced data analytics and machine learning capabilities.

- Analytics Platforms: Tools for complex data analysis and visualization.

- Machine Learning Frameworks: Support model development and deployment.

- Model Serving: Deploy trained models for inference in production systems.

8. Monitoring and Logging Layer

Provides visibility into the operation of the data platform.

- System Monitoring: Track the performance and health of infrastructure.

- Data Pipeline Monitoring: Observe the flow and processing of data.

- Logging: Record system and application logs for troubleshooting.

9. Infrastructure Layer

The underlying hardware and software that support all other layers.

- Cloud Services: AWS, Azure, GCP offerings for scalable resources.

- On-Premises Servers: Physical servers managed internally.

- Containerization and Orchestration: Docker and Kubernetes for deploying applications.

Key Features of a Data Platform

- Scalability: Ability to handle increasing amounts of data and users without compromising performance.

- Flexibility: Support for various data types and processing paradigms (batch and real-time).

- Reliability: Ensuring data is accurate, consistent, and available when needed.

- Performance: Optimized for fast data processing and query execution.

- Security: Robust mechanisms to protect data from unauthorized access and breaches.

How Data Platforms Enable Data Engineering

Data platforms are the backbone of data engineering efforts, providing the tools and infrastructure necessary to build and maintain data pipelines. They enable data engineers to:

- Automate Data Workflows: Use orchestration tools to schedule and manage pipelines.

- Process Data Efficiently: Utilize powerful processing engines for transforming data.

- Ensure Data Quality: Implement validation and cleansing processes.

- Collaborate Across Teams: Provide a unified environment for different roles to work together.

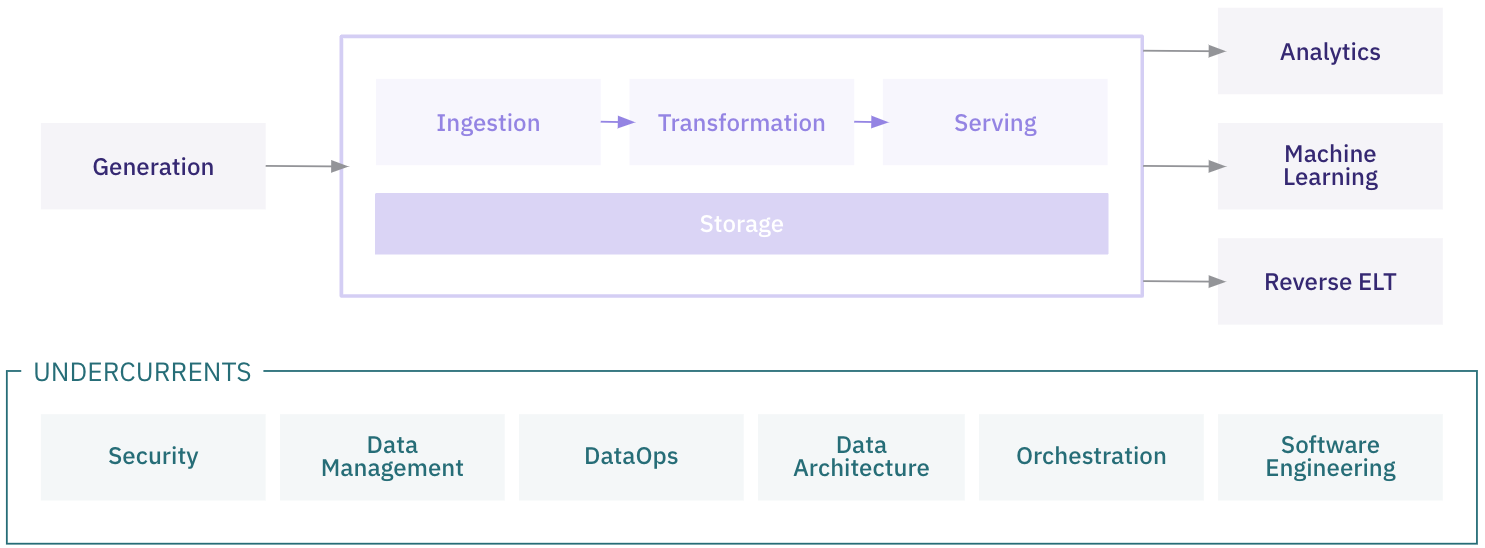

The Role of Data Platforms in the Data Engineering Lifecycle

A data platform supports every stage of the data engineering lifecycle:

- Generation: Captures data from various sources.

- Ingestion: Brings data into the platform.

- Storage: Holds data securely and durably.

- Transformation: Converts data into usable formats.

- Serving: Makes data accessible to consumers.

- Analytics: Supports data analysis and visualization.

- Machine Learning: Facilitates the development and deployment of ML models.

- Reverse ETL: Sends processed data back to operational systems.

Conclusion

A data platform is more than just a collection of tools; it's an ecosystem that enables organizations to leverage their data assets fully. By integrating various technologies and processes, a data platform facilitates the smooth flow of data from its origin to its ultimate use in decision-making and innovation.